SPARQL is an important step forward and a valuable tool for handling RDF stores - I'll not dispute that. However, SPARQL has also been hailed as the query language for the Semantic Web, the solution to the problems of accessing Semantic Web data - and that it isn't. I'll tell you why:

(1) SPARQL Interfaces and Computational Cost - Many websites today offer some kind of interface for accessing their data; almost none*, however, offer SQL interfaces. The most important reason is probably that SQL query evaluation can impose a serious and hard-to-control computational burden on the servers of the company supplying the data. SPARQL doesn't change this - in fact, SPARQL even encourages more complex queries (assuming the queries are evaluated against a relational database). So it is hard to see why companies that don't offer an SQL interface would start offering a SPARQL one.

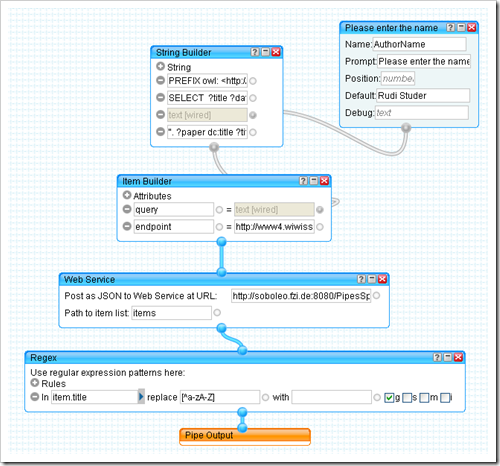

(2) The Problems of Large Scale Federated Search - A Semantic Web search engine gets a query, does a bit of query processing, sends queries to the SPARQL endpoints it knows, aggregates and reasons over the answers it gets and finally returns a result for the initial query. That's federated search in a nutshell - and it isn't going to work; not on web scale and not simply. The problems with this approach are response time and query routing. Response time, because this Semantic Web search engine is going to be SLOW - its speed is limited by the slowest SPARQL endpoint it has to access (plus the fact that it has to do a lot of network access). Query routing is a big challenge because the search engine would need to be very selective about which SPARQL endpoints it asks for an answer to a particular query - if it isn't, it is going to overwhelm the endpoints with traffic very quickly. Or what would you say if your site's SPARQL interface suddenly got requests amounting to 1% of all Google searches?** - and possibly without reimbursement.
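
To make the response time argument concrete, here is a minimal sketch in Python. It is not a real federated engine: the function name `federated_response_time`, the constant `overhead` and the latency figures are all made up for illustration. It only shows that, even when every endpoint is queried in parallel, the total time is bounded below by the slowest endpoint.

```python
def federated_response_time(endpoint_latencies, overhead=0.0, parallel=True):
    """Estimate the time (in seconds) needed to answer one federated query.

    endpoint_latencies: response time of each endpoint that has to answer.
    overhead: local query processing and aggregation time (assumed constant).
    parallel: if True, all endpoints are queried at once; otherwise one by one.
    """
    latencies = list(endpoint_latencies)
    if not latencies:
        return overhead
    # In parallel we wait for the slowest endpoint; serially the delays add up.
    waiting = max(latencies) if parallel else sum(latencies)
    return overhead + waiting


# Made-up latencies: three fast endpoints and one slow one.
latencies = [0.05, 0.08, 0.12, 4.0]
print(federated_response_time(latencies))                  # dominated by the slow endpoint
print(federated_response_time(latencies, parallel=False))  # every endpoint adds its delay
```

In this toy model, adding more fast endpoints never helps, while a single slow one sets the pace for the whole query.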

(3) Not all triples are equal - SPARQL knows two kinds of triples: those that exist and those that don't. Answering queries over diverse RDF data created in an uncontrolled, distributed way, however, will also need weights on the triples, based on how often and by whom they have been stated. Assume that there are 5000 sources stating (USA is_adjacent Canada) and 4 stating (USA is_adjacent Uzbekistan) - do you then really want to treat these two triples equally?
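
One simple way to attach such weights, sketched here in Python, is to count the distinct sources stating each triple. The helper names `triple_support` and `well_supported`, the source identifiers and the threshold of 10 are assumptions chosen for illustration; nothing like this is part of SPARQL itself, and a realistic weighting would also have to consider who the sources are.

```python
from collections import defaultdict


def triple_support(statements):
    """Count how many distinct sources state each triple.

    statements: iterable of (source, (subject, predicate, object)) pairs.
    """
    sources_by_triple = defaultdict(set)
    for source, triple in statements:
        sources_by_triple[triple].add(source)
    return {triple: len(sources) for triple, sources in sources_by_triple.items()}


def well_supported(support, min_sources):
    """Keep only the triples stated by at least min_sources distinct sources."""
    return {triple for triple, count in support.items() if count >= min_sources}


canada = ("USA", "is_adjacent", "Canada")
uzbekistan = ("USA", "is_adjacent", "Uzbekistan")

# 5000 hypothetical sources state the first triple, 4 state the second.
statements = [(f"source-{i}", canada) for i in range(5000)]
statements += [(f"source-{i}", uzbekistan) for i in range(4)]
statements.append(("source-0", canada))  # a repeat by the same source is not counted twice

support = triple_support(statements)
print(support[canada], support[uzbekistan])
print(well_supported(support, min_sources=10))
```

A purely boolean model sees both triples as simply present; the counts make the difference between them visible.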

(4) Pedigree matters*** - As I understand it, SPARQL assumes one global graph of all RDF statements. This is problematic because it allows even a single malicious file to "infect" everything. In traditional retrieval, when you have one malicious file you'll have one bullshit result and n-1 normal results. With one global graph, just one file can make all results unreliable.
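
The difference can be sketched in Python by contrasting a merged graph with one graph per file. Everything here is hypothetical: the file names, the toy pattern matcher (with `None` as a wildcard) and the function names are illustrative assumptions, not how any real triple store works.

```python
def matches(triple, pattern):
    """True if triple fits pattern; None in the pattern is a wildcard."""
    return all(want is None or want == got for want, got in zip(pattern, triple))


def query_merged(graphs, pattern):
    """Query one global graph: statements from all files are mixed together."""
    merged = set().union(*graphs.values())
    return sorted(t for t in merged if matches(t, pattern))


def query_by_source(graphs, pattern):
    """Query each file separately, so statements that stood together stay together."""
    results = {}
    for source, triples in graphs.items():
        found = sorted(t for t in triples if matches(t, pattern))
        if found:
            results[source] = found
    return results


graphs = {
    "atlas.example/geo.rdf": {("USA", "is_adjacent", "Canada"),
                              ("USA", "is_adjacent", "Mexico")},
    "spam.example/junk.rdf": {("USA", "is_adjacent", "Uzbekistan")},
}
pattern = ("USA", "is_adjacent", None)

print(query_merged(graphs, pattern))     # the junk triple is indistinguishable
print(query_by_source(graphs, pattern))  # the junk stays confined to its own file
```

In the per-file view a bad file yields one bad result group that can be discarded as a whole, which is the n-1 behaviour of traditional retrieval.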

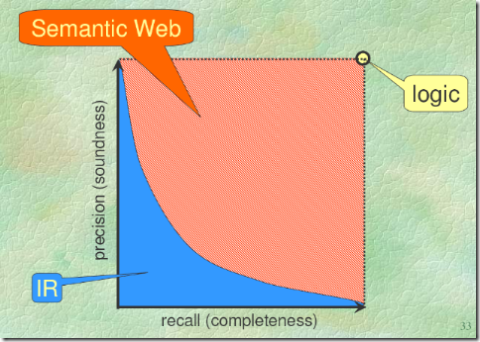

Conclusion - So in the end there is reason to doubt that many websites will offer SPARQL interfaces (1), and even if they do, it will be difficult to use them to answer queries (2). Assuming these problems could be overcome, SPARQL still has a model that is purely boolean (3) and that assumes one global graph (4) - both notions are inappropriate for web scale query answering.

And yea - none of this is entirely new and none of it is a 100% certain showstopper; it's possible that all these problems could be overcome. But all this should serve as a reminder that SPARQL isn't the Semantic Web query language, at least not yet.

*: Facebook is the one notable exception: it offers an interface using a powerful query language (although it isn't SQL).

**: In fact publicly available data tells us that this would be only roughly 24 queries/second, but that number is almost surely much too small.

***: Yea, I know, a more appropriate title could be provenance or lineage, but I wanted to emphasize a slight difference in the concepts - that I'm not interested in where each statement came from as much as which statements stood together.
